Blender Depth Maps in Godot

This tutorial describes a method of using high-quality, pre-rendered content from Blender in an orthographic, fixed-camera-angle game in Godot. The pre-rendered content is mixed with real 3D assets that interact with the fake 3D scene in a consistent way, moving in front of and behind the scenery in the right places. The technique allows artists to create highly detailed scenery and other static elements, using the advanced rendering and lighting offered by Blender, without overloading the Godot game engine. Your game can therefore run on a wider range of devices and still look great.

The technique imposes some constraints on the style of game you can create, as the camera angle in the Godot game has to match the camera used to render out the assets in Blender. For this example, I use a simple orthographic camera, as it makes a lot of things easier. This technique allows you to create games not dissimilar to Homescapes and its ilk.

There are many aspects to coherently integrating pre-rendered graphics into a 3D scene; this tutorial only covers the inclusion of the pre-rendered art and depth buffer. It does not cover interaction of 3D light sources with the pre-rendered assets, or shadow casting. If there is enough interest, and I can work out a technique for doing it, I may follow up with additional tutorials in this series to cover these aspects later.

Blender

The first task is to get the pre-rendered assets out of Blender in a format that can be used in Godot. As previously explained, the depth buffer is created from a fixed camera angle in Blender, so it's imperative that the camera matches in Godot, otherwise the illusion will not hold. The easiest way to achieve this is to set up your orthographic camera in Blender and export it as glTF to import into Godot, which gives you a matching camera setup in Godot. At the time of writing, the glTF exporter from Blender places the camera into a parent node that sets the position and orientation of the camera, while the camera itself has an identity transform. This has a secondary benefit, in that it's easy to move the camera in local space in the Z plane to get panning effects, and to zoom using the size parameter, without affecting the technique. More on this later.

In Blender, create an empty general scene, select the default cube and delete it. To place the camera, select the default camera, press 'N' to open the sidebar, make sure the 'Item' panel is visible and set the properties as follows. You can, of course, change these as you see fit. The rotation settings make for a good default isometric camera when changed to orthographic, and these numbers work as a starting point and for the remainder of this tutorial.

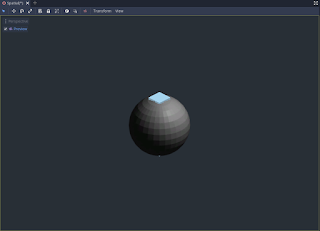

Select the 'Object Data Properties' panel in the properties editor, and change the lens type to Orthographic, the orthographic scale to 7.0, and ensure the limits are visible in the Viewport Display section. Press 'Shift+A' to add a new object to the scene, and choose Mesh/Ico Sphere. Leave the sphere at the default size, which should be fine for this example. To check, press '0' on your keypad (or select the View menu, then Viewpoint-->Camera) to view from the camera. It should look something like this.

In the viewport, press '1' on your keypad (or select the View menu, then Viewpoint-->Front), and then press '4' on your keypad twice (or select the View menu, then Navigation-->Orbit Left). Finally, switch to orthographic view with '5' on your keypad (or the View menu, then Perspective/Orthographic). This should give you a side-on view of the camera and sphere, something like this.

In the 'Object Data Properties' panel, adjust the Clip Start and End values until the markers are just either side of the sphere. This reduces the depth range that has to be captured in the depth buffer, which can help with increasing the accuracy of the depth map. It is not strictly necessary, but it is good practice. Take note of the values. I found 34 and 38 to be about right, which should give you something like this (note: I zoomed and panned to get that view).

In the 'Output Properties' panel, set the output resolution to 512x256. In the 'Render Properties' panel, set the Film to transparent, and the Color Management View Transform to Raw. If you render now, you'll get something like this.

This is the color pass that we'll be using in Godot. However, we also need a depth pass in a format that is easy to use in Godot, and for that we'll be switching to the Blender compositing system. Select the Image menu and save the image as a .PNG now, and call it color.png.

The two math nodes in the middle are a subtract and a divide. These are used to normalise the depth information to between 0 and 1 between the clip start and end depths. The values map to the camera clip start and end values. The subtract value is just the clip start, which shifts the 0 value to the clip start depth. The divide value is the clip end minus the clip start, which scales the rest of the values down so that anything between the clip start and clip end gets mapped into the 0...1 range. So, for the clip settings used above, the subtract is 34 and the divide is 4 (38-34). I've added a viewer node so that you can see the resulting depth map.
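As a quick sanity check of the subtract and divide values, here is a minimal Python sketch of the same arithmetic. It assumes the depth pass holds the distance from the camera in scene units; the function name `normalise_depth` is only for illustration and is not part of Blender.

```python
def normalise_depth(z, clip_start, clip_end):
    """Map a camera distance to 0..1 between the clip planes."""
    shifted = z - clip_start                   # Subtract node: clip start becomes 0
    return shifted / (clip_end - clip_start)   # Divide node: clip end becomes 1


# Clip values used in this tutorial
CLIP_START, CLIP_END = 34.0, 38.0

for z in (34.0, 36.0, 38.0):
    print(z, normalise_depth(z, CLIP_START, CLIP_END))
```

A point halfway between the clip planes (36) lands on 0.5, which is the mid-grey you would expect to see in the viewer node.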

Now, back in the 'Output Properties' panel, ensure the Post Processing Compositing flag is set, and re-render the image. This time you should see something like this.

This needs to be saved as the depth buffer, but do not save it as a .PNG. That would lose too much information to be useful, as the depth values would be quantised down to 8-bit integers. Instead, choose to save it as OpenEXR, with Float (Full) as the color depth. Stick with RGBA as the color for now (we'll return to this later), and call this image depth.exr.
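To get a feel for how coarse an 8-bit depth map would be, the following Python sketch computes the smallest depth difference an 8-bit channel could hold over the 34 to 38 clip range. It is a rough illustration only: the helper `quantise` is hypothetical, and it ignores any color management that might be applied on save.

```python
def quantise(value, bits):
    """Round a 0..1 value to the nearest level an integer channel can store."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels


CLIP_START, CLIP_END = 34.0, 38.0
depth_range = CLIP_END - CLIP_START

# Smallest depth difference an 8-bit channel can represent, in scene units
step_8bit = depth_range / ((1 << 8) - 1)
print(f"8-bit depth step: {step_8bit:.4f} units")

# Two surfaces 0.005 units apart end up with the same 8-bit value
a = (36.100 - CLIP_START) / depth_range
b = (36.105 - CLIP_START) / depth_range
print(quantise(a, 8) == quantise(b, 8))
```

With only 256 levels across the range, nearby surfaces share a depth value, which would show up as stepping where a 3D object passes through the pre-rendered scenery.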

For now, if you need to re-render the color pass, just turn the compositing flag on and off in the Post Processing panel.

The final step in Blender is to output a glTF2 version of the scene to make it easier to get the matching camera into Godot. Choose Export-->glTF 2.0 (.glb/.gltf) from the File menu. Ensure the option panel is visible by pressing 'N', and in the 'Include' panel, select 'Cameras'. Then export to camera.glb.

That's all we need at the moment from the Blender side. Now on to Godot.

Godot

In Godot, create a new project using GLES3, and create a 3D scene to get a Spatial root. Make sure the camera.glb is in your project folder. Right click on the Spatial and choose Merge From Scene, then choose the camera.glb that you exported from Blender. When shown a list of nodes to merge, choose the 'Camera' and its child 'Camera_Orientation'. This should bring the camera into your scene. Save the scene.

Make sure the depth.exr and color.png images are in your project folder. Select depth.exr and go to the Import tab. Make sure the Mode is set to Lossless, Filter is off, Detect 3D is off, and Fix Alpha Border is off.

Create a Sprite3D node under the root Spatial. Drag the color.png onto the Texture slot to test it out. We'll be replacing the shader shortly, but this helps place the object.

Note: it seems that the Texture channel on the Sprite3D is used to determine the size from the image size, so it's imperative that this texture matches the albedo texture you will apply in the custom shader later.

In the Flags section of the Sprite3D Inspector panel, set the Billboard to Enabled. This won't have any effect once the custom shader is applied, but again, for now, it helps visualise.

I've wrestled with the Pixel Size of the Sprite3D for a while, and the following is the best I've come up with so far. If I discover any new information I'll update this later, but it seems to work in all the cases I've tried. I set the Pixel Size to the Orthographic Scale value from Blender divided by the major size of the output image. In this case, that's 7.0 and 512, so 7.0/512 = 0.0137.
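The same calculation as a small Python sketch, in case you want to try other resolutions or scales. The function name `sprite_pixel_size` is made up for this example, and taking the longer side of the image as the "major size" is my reading of the rule above.

```python
def sprite_pixel_size(ortho_scale, width_px, height_px):
    """Pixel Size = Blender orthographic scale / longest image side in pixels."""
    return ortho_scale / max(width_px, height_px)


# Values used in this tutorial: scale 7.0, 512x256 render
pixel_size = sprite_pixel_size(7.0, 512, 256)
print(round(pixel_size, 4))
```

If you change the render resolution but keep the same orthographic scale, the Pixel Size shrinks or grows in proportion, so the sprite should cover the same area in the scene.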

For testing, create a cube in the scene, we'll use this to check the depth buffer operation once that's in place. Create a child of the root Spatial of type MeshInstance. In the Mesh field, choose New CubeMesh. Select the created mesh, and set the Size parameters to 0.5, 2, 0.5. This creates a tall, thin cube going through the Sprite3D. In the main view, you should see something like this.

You can see the cube intersects the Sprite3D as expected, as the Sprite3D is just a camera oriented plane at the moment. This is where the magic happens.

In the Sprite3D Inspector, unfold the Geometry panel, and create a new ShaderMaterial in the Material Override field. Select it to edit, and in the Shader field, select New Shader, then select it to edit. This will open the shader editor with an empty shader. Copy the following code into that shader.

    shader_type spatial;
    render_mode blend_mix, depth_draw_opaque, cull_back, unshaded;

    uniform vec4 albedo : hint_color = vec4(1, 1, 1, 1);
    uniform sampler2D texture_albedo : hint_albedo;
    uniform sampler2D texture_depth;
    uniform vec3 uv1_scale = vec3(1, 1, 1);
    uniform vec3 uv1_offset;
    uniform float near;
    uniform float far;

    void vertex() {
        UV = UV * uv1_scale.xy + uv1_offset.xy;
        MODELVIEW_MATRIX = INV_CAMERA_MATRIX * mat4(CAMERA_MATRIX[0], CAMERA_MATRIX[1], CAMERA_MATRIX[2], WORLD_MATRIX[3]);
        MODELVIEW_MATRIX = MODELVIEW_MATRIX * mat4(
            vec4(length(WORLD_MATRIX[0].xyz), 0.0, 0.0, 0.0),
            vec4(0.0, length(WORLD_MATRIX[1].xyz), 0.0, 0.0),
            vec4(0.0, 0.0, length(WORLD_MATRIX[2].xyz), 0.0),
            vec4(0.0, 0.0, 0.0, 1.0));
    }

    void fragment() {
        vec2 base_uv = UV;
        float d1 = texture(texture_depth, base_uv).r;
        float depth = -d1;
        vec4 viewSpace = vec4(0.0, 0.0, (depth * (far - near)) - near, 1.0);
        vec4 worldSpace = CAMERA_MATRIX * viewSpace;
        vec4 translation = WORLD_MATRIX * vec4(0.0, 0.0, 0.0, 1.0);
        worldSpace.x += translation.x;
        worldSpace.z += translation.z;
        vec4 viewSpace2 = INV_CAMERA_MATRIX * worldSpace;
        vec4 clipSpace = PROJECTION_MATRIX * viewSpace2;
        clipSpace.z = 0.5 * (clipSpace.z + 1.0);
        vec4 albedo_tex = texture(texture_albedo, base_uv);
        ALBEDO = albedo.rgb * albedo_tex.rgb;
        ALPHA = albedo.a * albedo_tex.a;
        DEPTH = clipSpace.z;
    }

This will add some entries in the Shader Param section of the material. In the Near and Far parameters, enter the Clip start and end values from Blender, in this case 34 and 38. Then drag the color.png texture onto the Texture Albedo field, and the depth.exr image onto the Texture Depth field.

The shader does the work of interpreting the information in the depth map and setting the correct depth to fake the 3D effect. The vertex shader simply sets up the UV, and then applies the billboarding to the incoming vertex data to ensure it is always aligned towards the camera. The fragment shader reads out the depth information from the OpenEXR depth map, and then remaps it to view space. The information in the depth map is in the range 0..1, where 0 is the clip start in Blender and 1 is the clip end, so the shader uses the near and far parameters to turn it back into a distance from the camera. It then converts that to world space to be shifted in X and Z before transformation back into the clip space of the Godot camera, which is the space required for the z-buffer. The rest is just applying the color map verbatim, with no lighting or adjustment.
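The depth part of the fragment shader can be followed by hand with a little Python. This is only a sketch of the arithmetic, under some assumptions: it leaves out the world-space X and Z shift, it assumes a standard OpenGL-style orthographic projection, and the Godot camera clip values `CAM_NEAR` and `CAM_FAR` are hypothetical numbers picked for the example (they are not the shader's near and far parameters).

```python
def depth_map_to_view_z(d, near, far):
    """Turn a 0..1 depth-map sample into a view-space Z (negative = in front)."""
    return -(near + d * (far - near))


def ortho_view_z_to_depth(view_z, cam_near, cam_far):
    """Project view-space Z with an OpenGL-style orthographic matrix, then
    remap the -1..1 result to the 0..1 range that is written to DEPTH."""
    ndc_z = (-2.0 * view_z - (cam_far + cam_near)) / (cam_far - cam_near)
    return 0.5 * (ndc_z + 1.0)


# Shader parameters from this tutorial (Blender clip start and end)
NEAR, FAR = 34.0, 38.0
# Hypothetical Godot camera clip planes, chosen only for this example
CAM_NEAR, CAM_FAR = 1.0, 101.0

view_z = depth_map_to_view_z(0.5, NEAR, FAR)
print(view_z, ortho_view_z_to_depth(view_z, CAM_NEAR, CAM_FAR))
```

A sample of 0.5 sits 36 units in front of the camera, and the value written to DEPTH is where that distance falls between the Godot camera's own clip planes. This is why the Godot camera has to match the Blender one for the depths to line up with real 3D objects.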

Voila! Nothing?!? It still looks the same. This is because the editor camera doesn't match the camera you created from Blender. The easiest way to address this is to select the Camera_Orientation node, and in the viewport, click Preview to use the camera in the viewport. You should now see this.

If you grab the MeshInstance while the camera is still active, and use the Transform panel in the Inspector to move it in X and Z you should see it interacting with the fake depth map, like so.

The Blender and Godot files for this example can be found here: Zip File

This is far from a perfect solution, and I'm certain there are things I'm doing that could be done better, or even things that I'm doing that are simply wrong, but it works for my needs as they stand right now and I hope it proves to have some value, even if only as a guide towards a better solution, for someone else out there. As always, any suggestions and comments are more than welcome.

I hate when I find a seemingly obscure blog post about something that I'm really interested in and it's basically a dead end. I want to get this exact effect but am currently struggling with light and shadow. Sadly the other blog you linked also doesn't touch on those except with some vague hints at possible solutions (which I lack the skill to work out by myself).

If you are still alive and have worked on this some more, I sure would love to read about it. In any case, thanks for the write-up of this technique; works like a charm!

Hi, still here, but I abandoned this approach as it was too much work for too little gain. I've switched to a full 3D approach now. Sorry.

I was thinking the same. This setup works well, but doing light and shadow is beginning to seem insurmountable, certainly at my skill level. I'm fond of the style in general, but there is a distinct sense of getting the worst of both worlds. All the complexity of 3D with none of the conveniences.

Thank you for taking the time to reply though! And this is still a neat technique, who knows I may come back to it at a later point.